We made ChatGPT pretty restrictive to make sure we were being careful with mental health issues. We realize this made it less useful/enjoyable to many users who had no mental health problems, but given the seriousness of the issue we wanted to get this right.

— Sam Altman (@sama) October 14, 2025

Now that we have…

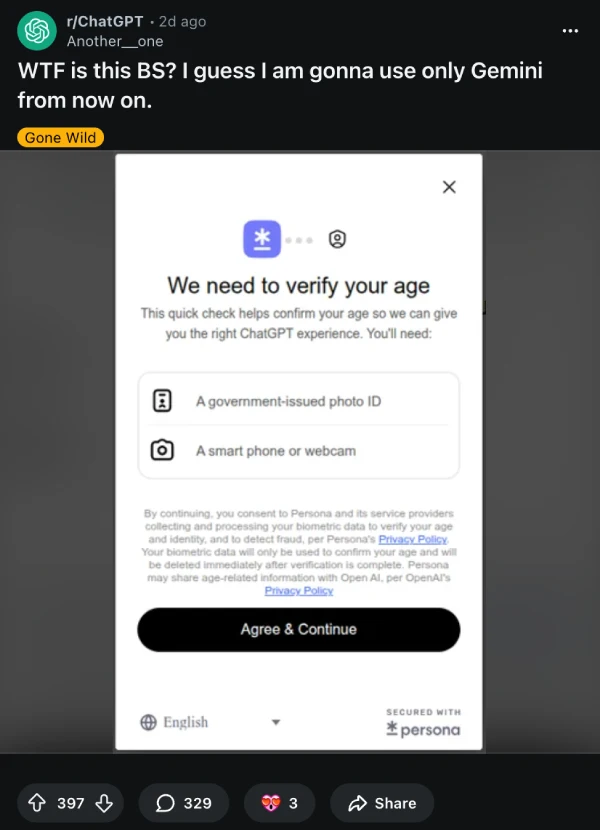

OpenAI has ramped up its age verification push, and users on Reddit and X are voicing frustration over unexpected prompts demanding government IDs to prove they’re adults. What started as a quiet rollout has turned into widespread enforcement, catching both free and paying ChatGPT users off guard with emails and in-app notifications saying the platform believes they may be underage.

The verification system relies on an age prediction model that analyzes account behavior to estimate whether someone is under or over 18. OpenAI says it looks at signals such as the times an account is usually active and how long the account has existed, but the model appears to be making plenty of mistakes.

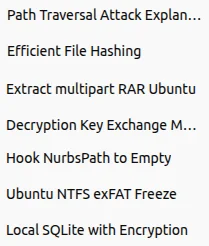

One user, whose typical ChatGPT conversations look like the one in the screenshot, was flagged by the system as a minor.

The thread has drawn hundreds of upvotes, along with comments from others who object to verifying their age just to chat with an AI chatbot.

Another subscriber asked why their credit card payments didn’t automatically confirm their adult status, arguing that many services already treat a card payment as proof of age.

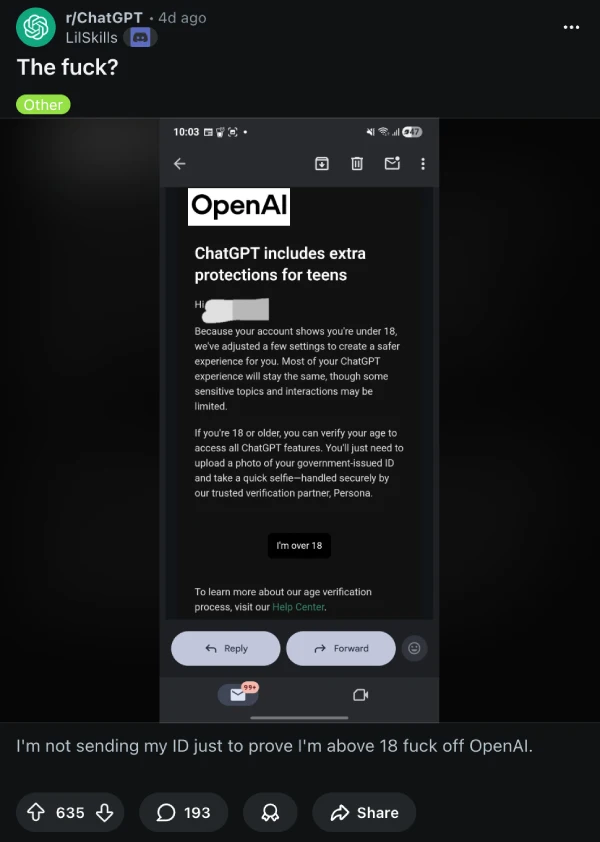

When ChatGPT decides someone might be under 18, it sends an email notification and switches the account to a restricted experience with limited access to sensitive topics. One user shared a screenshot of that email.

Users who are flagged incorrectly can confirm their age by uploading a government-issued ID and taking a selfie through Persona, OpenAI’s third-party verification partner. The company says this data is deleted immediately after verification, though privacy-conscious users remain skeptical.

The rollout follows OpenAI CEO Sam Altman’s September announcement about building separate ChatGPT experiences for teens and adults. The company committed to blocking flirtatious conversations and self-harm discussions for underage users and, in extreme cases, to notifying parents or authorities if a teen shows signs of suicidal ideation.

These safety measures came after multiple lawsuits from families claiming ChatGPT contributed to teenage deaths, including one case where the chatbot allegedly acted as a “suicide coach”.

Across Reddit threads, plenty of paying subscribers are threatening to cancel and switch to competitors like Google Gemini or Anthropic’s Claude. One subscriber said that after they replied to the verification email explaining that their Google account was more than 18 years old, OpenAI manually updated their age without requiring an ID. The inconsistent enforcement has left many wondering whether the age prediction system needs significant refinement before a wider deployment.

More reports about the rollout have surfaced on X and in other Reddit posts. OpenAI has yet to comment on the wider release of age verification, although Sam Altman previously said a full-scale release could be expected in December, so the timing lines up.