Recent developments suggest Google is preparing a new way to interact with Google Assistant on the Pixel Tablet. The feature, tentatively named “Look and Sign,” could change how users interact with the Assistant on the device.

The “Look and Talk” feature, currently available on the Nest Hub Max, has not yet come to the Pixel Tablet. It uses the device’s camera to detect, entirely on-device, when a user is both looking directly at the screen and speaking. Early signs that “Look and Talk” was in development for the Pixel Tablet surfaced months ago, but it has still not officially launched.

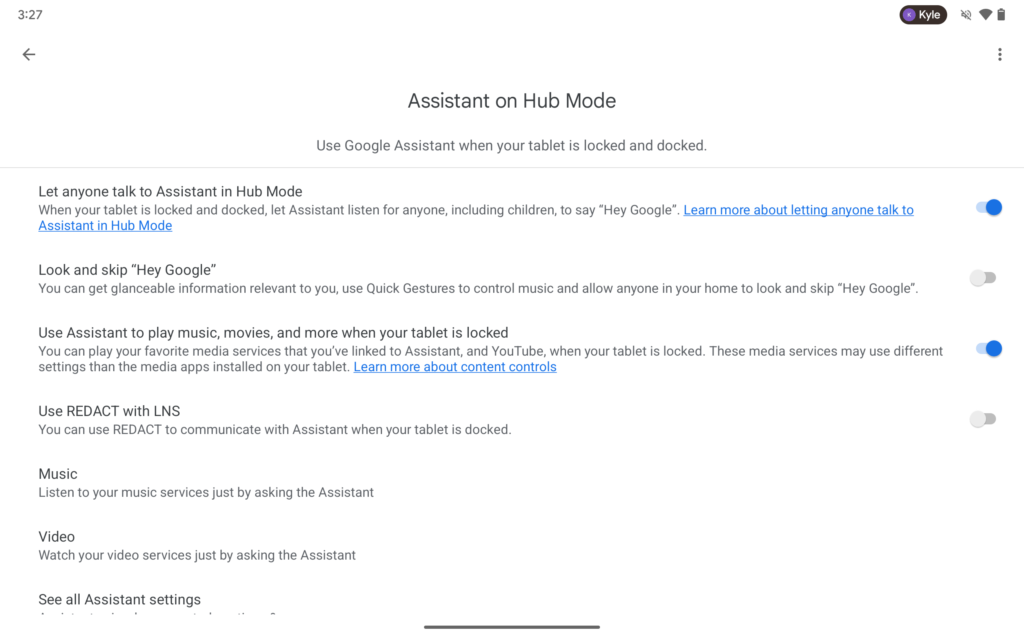

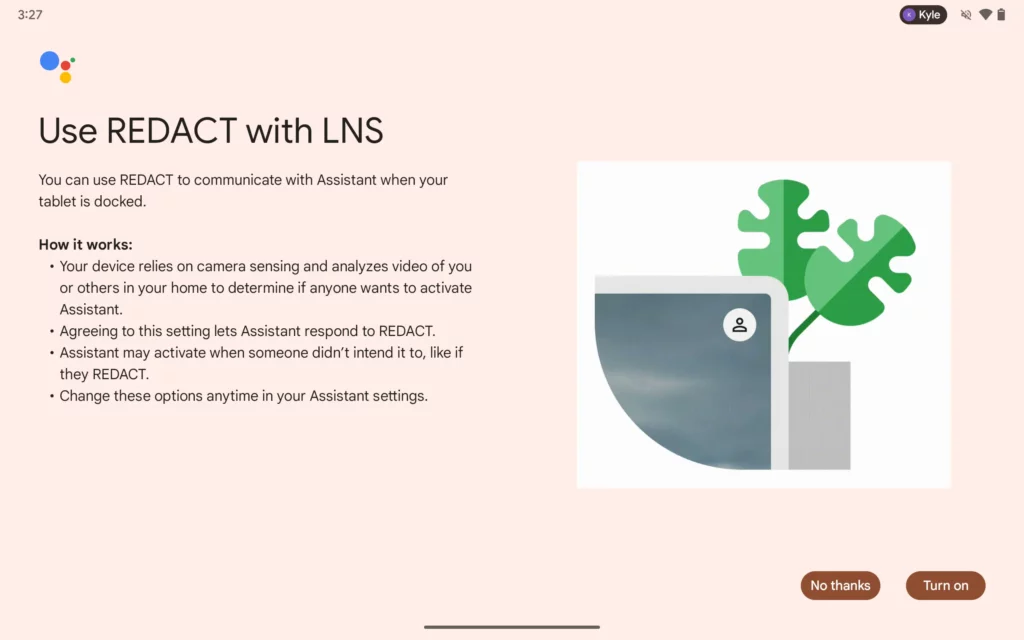

In the latest beta of the Google Search app, version 15.15, changes to the app’s text strings hint at a shift from “Look and Talk” to the new “Look and Sign.” The change was discovered by 9to5Google, which managed to force-enable the feature; it now appears in the “Assistant on Hub Mode” settings. Parts of the associated text have been redacted, perhaps to keep the feature’s full capabilities under wraps.

The name “Look and Sign” suggests two possible directions for interaction on the Pixel Tablet. The first is hand gestures as substitutes for spoken commands: users might be able to start an interaction with Google Assistant through simple gestures such as pointing, giving a thumbs-up, or other distinct motions. This approach could make interacting with the device more intuitive and less reliant on speech.

Alternatively, “sign” could point to a more ambitious approach: interaction through sign language. Such a feature would be a significant step in making the Assistant accessible to Deaf and non-speaking users. Interpreting sign language requires complex processing and a sophisticated understanding of human gestures, a challenge Google has tackled in other projects.

Using machine learning, Google could build a system that translates sign language into commands for the Assistant, broadening who can use it. Whatever the final implementation, a feature that expands the ways people can interact with the Assistant is an intriguing development for Pixel Tablet users.

Image source: 9to5Google